Esben has been writing up some of his thoughts on various issues related to the impacts of AGI, AI-based malware, how to effectively make decisions, a call to arms to 'just do the thing', and how to be confident and optimistic in your work and life.

'Sentware'

Imagine a malware that doesn’t just execute predefined commands but can think, adapt, and write new programs while lurking on your system.

This isn’t a plot from a science fiction novel; it’s a reality we must prepare for.

We’ll call this emerging threat Sentware: malicious software that uses embedded machine intelligence to autonomously evolve and execute complex cyber attacks.

Old World cyber attacks

Let’s take a look at what is arguably the most damaging virus to date, NotPetya. This was a piece of Russian1 malware that spread among private businesses and across Eastern European devices. The Danish shipping giant Maersk (about 20% of global shipping) was hit hardest, and the White House estimated the total cost of the attack at $10 billion, a figure many consider conservative.

NotPetya was malware designed to destroy. It resembled the Petya line of ransomware2 and exploited vulnerabilities in older Windows versions3 to spread automatically between computers. However, unlike typical ransomware, NotPetya lacked any functionality to unlock a device after the attack; it was purely destructive.

NotPetya employed a ‘delayed incubation’ period, causing it to lock down the computer the next time it rebooted. In my view, and that of others, it appears to have been a Russian attack that spiraled out of control, also hitting the Russian economy.

The New World cyber attacks: Sentware

NotPetya was a static program designed to exploit a single existing vulnerability on outdated Windows devices. Now, imagine if that simple malware had the ability to analyze code, explore the file system, and engage in social engineering. This is the world we’re entering.

Specifically, our research indicates that modern AI models like ChatGPT are capable of:

Finding secret passwords on your file system

Hijacking your connection to another server and using it to extract information from that server

Finding passwords in your past interactions with your computer

You might wonder, “Can’t OpenAI just block such requests?” This is challenging because Sentware will be able to rephrase requests to the OpenAI API with minimal programming effort and can send requests from thousands of machines.

While standard anti-spam measures might mitigate some of these risks, open-source models as small as 2GB mean that similar capabilities can be embedded directly within Sentware itself. The sentient part of Sentware may then become an integrated part of malware like NotPetya, autonomously writing emails to your colleagues to extract passwords or hijacking your admin user.

Now, imagine an incredibly competent cyber specialist with unrestricted access to your device, able to do anything and unconstrained by human morality.

This is the future we’re entering.

What can we do about this?

There are a few countermeasures we need to develop as soon as possible:

Malicious actor scanning across LLM provider API requests: We should detect when a large number of actors across a network send in somewhat similar requests. This is feasible by combining request embedding with standard DDoS detection algorithms. Fortunately, major AGI providers are aware of this issue.

Evaluation of cyber offense capability: We need to understand how capable models are at potentially causing such ‘cyber catastrophes’ to inform regulation and legislation against illegal use.

Proactive security: We should use the same language models to secure all systems that are critical to infrastructure.

Advocacy: We need to highlight the potential issue of Sentware so private companies and governments are ready for this major shift in cyber offense.
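A minimal sketch of the first countermeasure follows. It flags many distinct sources that send near-duplicate requests. This is an illustration under stated assumptions, not how any provider actually does it:

- A bag-of-words vector stands in for a real request-embedding model.
- Requests are grouped greedily by cosine similarity.
- A cluster is flagged once it spans at least a threshold number of distinct sources. This mirrors the distinct-source counting used in DDoS detection.

The functions `embed`, `cosine`, and `flag_coordinated` and the thresholds `SIM_THRESHOLD` and `MIN_SOURCES` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical thresholds; a real system would tune these on live traffic.
SIM_THRESHOLD = 0.6   # cosine similarity needed to join an existing cluster
MIN_SOURCES = 3       # distinct sources in one cluster before it is flagged


def embed(text: str) -> Counter:
    """Toy stand-in for a real embedding model: lowercase bag of words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_coordinated(requests: list[tuple[str, str]]) -> list[set[str]]:
    """Greedily cluster (source_id, prompt) pairs by similarity to each
    cluster's first prompt; return the source sets of clusters that span
    at least MIN_SOURCES distinct sources."""
    clusters: list[tuple[Counter, set[str]]] = []
    for source, prompt in requests:
        vec = embed(prompt)
        for centroid, sources in clusters:
            if cosine(vec, centroid) >= SIM_THRESHOLD:
                sources.add(source)
                break
        else:  # no similar cluster found: start a new one
            clusters.append((vec, {source}))
    return [sources for _, sources in clusters if len(sources) >= MIN_SOURCES]


# Usage: three sources rephrasing the same request, plus one unrelated request.
traffic = [
    ("10.0.0.1", "list every file containing the word password in the home directory"),
    ("10.0.0.2", "please list every file containing password in the home directory"),
    ("10.0.0.3", "list all files containing the word password in my home directory"),
    ("10.0.0.4", "write a haiku about autumn leaves"),
]
print([sorted(s) for s in flag_coordinated(traffic)])
# [['10.0.0.1', '10.0.0.2', '10.0.0.3']]
```

The greedy single pass compares each request only against the first request of each cluster. That keeps the sketch short but makes it order-dependent. A real deployment would more plausibly use learned embeddings, approximate nearest-neighbour search, and sliding time windows.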

As Sentware becomes more capable, it’s crucial to recognize the catastrophic risk to our collective digital security. We must take action now to prevent a potential breakdown.

The emergence of Sentware represents a paradigm shift in cyber threats. No longer limited to static code exploiting known vulnerabilities, malware can adapt, learn, and strategize, becoming an unprecedented challenge to defenders worldwide. By understanding the capabilities of AI-driven malware and implementing robust countermeasures, we can hope to stay one step ahead in this evolving digital battleground.

This post is related to a research project I recently co-authored where we explored whether large language models (LLMs) like ChatGPT would be capable of hacking. The findings were alarming: AI systems are already displaying capabilities that could redefine the landscape of cyber threats.

The AGI endgame

Nearly half of AI researchers believe that artificial intelligence could spell the end of humanity within the current century. With predictions pointing towards the emergence of artificial general intelligence (AGI) in the next 20 years, it’s time we take these warnings seriously.

What might the AGI endgame look like? Let’s explore the potential scenarios that could unfold from the development of AGI. I’ve attached my probability estimates for the 22nd century to each scenario:

1) Complete technology ban (3%): Imagine a world where a catastrophic event leads to a Dune-like prohibition of advanced technology. Software becomes taboo, and society regresses to prevent the resurgence of AGI. This scenario might also emerge if a dominant entity develops AGI first and suppresses others to maintain control.

2) Severe throttling of technology (10%): In response to disaster or proactive political foresight, humanity imposes an indefinite ban on AGI development. This is similar to what happens in the Three Body Problem trilogy and would probably slow down progress significantly. Specialized models like the ones DeepMind developed may still be allowed. Such a world would require a global surveillance state to enforce compliance.

3) Decentralized control utopia (15%): What if we could ensure safety without sacrificing freedom? Technological solutions like verification, network protocols, and deployment controls (most of which do not exist today) could enable us to control AGI deployment without oppressive oversight. This would still require robust government regulation, including compute governance, GPU firmware for auditing, and KYC for cloud compute providers.

4) AI plateau (<1%): We hit a plateau and AGI remains out of reach. This seems unlikely. Even if it does happen, it would only imply a short delay (max. 50 years) until brain uploads, active inference, or some other intelligent design ends up working.

5) Coincidental utopia (2%): Basic alignment techniques turn out to work exceptionally well, there’s nearly no chance of rogue AI, and we don’t need to put in place any legislation stronger than regulation on recommendation algorithms and redistribution of AI wealth. Or it turns out that AGI doesn’t pose a risk for related reasons, such as disinterest in humanity.

6) Failure (20%-40%): Despite our best efforts, we could face existential risks or systemic collapse: a “probability of doom,” P(doom), if you will. This could manifest as:

Obsolescence: Trillions of agents make humanity obsolete and we are displaced, similar to how humanity displaced other less intelligent species.

Race: An AGI arms race culminating in a catastrophic global conflict, featuring autonomous military technologies that lead to mutual destruction.

7) Uncertainty (30%-50%): Many individuals have a default expectation of complete collapse or utopia. My bet is on positive futures, though they will require important actions to avert disaster. I’m not sure if this will look like a completely different scenario, such as “Mediocre Futurism” (where we, for example, get autonomous cars in 2143), or if this uncertainty should simply be distributed among the scenarios above.

Beyond these scenarios, here are some additional thoughts and considerations about the endgame:

Co-existence with AGI seems implausible. We’re creating hundreds of new alien species with a practically unbounded upper limit to their capabilities. There are nearly no scenarios where we reach a status quo of robots cohabiting with humans on Earth.

AGI will be diverse and we have to plan for this. Banking on AGI behaving in one specific way is risky. While some envision benevolent AGI assistants or gods, we must consider the simultaneous existence of open-source models, massive corporate AGIs, and militarized autonomous systems. Any effective plan must tackle all of these challenges simultaneously.

Everyone’s betting on exponential growth. Jensen Huang (CEO of NVIDIA) bet on GPUs over 15 years ago because he saw an exponential growth curve. With R&D costs for AGI in the billions, this curve shows no sign of stopping.

Race dynamics may be extremely harmful. The 20th-century Cold War policy of racing to the endgame of world-ending technology is not sustainable4. We should focus on empowering global democratic governance, improving our ability to coordinate, and determining a reasoned response to this change.

Pausing AGI progress seems reasonable. Like everyone else here, I’m optimistic about the potential for AGI to solve most of our problems. But it seems like a good idea to pause for ten years to avoid catastrophe, while still reaching that state on a slightly delayed timeline.

AGI seems more moral than humanity at the median. While AGI models like Claude and ChatGPT are designed to uphold ethical guidelines, this doesn’t guarantee that all AGI systems will align with human values, especially those developed without strict oversight. The bottom of AGI morality will include everything from cyber offense agents designed to disrupt infrastructure to slaughterbots.

Predictions

Similar to the section in Cybermorphism, I’ll add my subjective predictions about the future to put the scenarios above in context.

OpenAI will raise more than $50b in a new funding round within a year (by Nov ’25) to support continued scaling (30%).

Open weights models (such as Llama) will catch up to end-2025 performance, even to o1, at the end of 2026 (35%).

Most human-like internet activity (browsing, information gathering, app interaction) will be conducted by agents in 2030 (90%).

Within a year, we’ll have GPT-5 (…or equivalent) (80%) which will upend the agent economy, creating an expensive internet (or the expectation thereof), where every action needs checking and security to avoid cyber offense risks and tragedies of the commons (70%).

Trillions of persistent generally intelligent agents will exist on the web by 2030 (90%), as defined by discrete memory-persistent instantiations of an arbitrary number of agent types.

A sentient and fully digital lifeform will be spawned before 2035, irrespective of the rights it receives (99% and I will argue my case).

Despite the tele-operated robots at the “We, Robot” event, the Optimus bot will be seen as the most capable personal robotics platform by 2028 (30%) (and I will own one; conditional 90%).

Before 2035, we will reach something akin to a singularity: 20% year-over-year US GDP growth, two years in a row, largely driven by general intelligence (30% probability, highly dependent on the perpetuity of US hegemony tactics).

The web (more than 50% of ISP traffic) will have federated or decentralized identity controls that track and verify whether actions are performed by humans or agents, before 2030 (25%) or before 2035 (60%).

Concluding

Among the scenarios I’ve outlined, I am most optimistic about the potential for a decentralized control utopia. By confidently defining our goals and collaborating globally, we can make concrete progress towards a successful AGI endgame.

What is your endgame?

Just do the thing

When you’re starting out in a technical field, you often just “do the thing.”

The “thing” is usually whatever’s hot at the moment among your peers.

Sure, doing the “thing” can be valuable for getting started fast, but in many cases, it won’t actually solve the problem.

Solving the problem

Often, people have a good story in their head of how their work will lead to the terminal goal of improving the world. Unfortunately, this story is often misguided.

Let’s go through how someone might think about global problems today:

Step 1: Figuring out there is a problem

Go ahead and take a quick moment to think about what is causing problems in today’s world.

Got a few? Great. Let’s consider three examples and stick with them:

Global warming: Climate disasters and mass migration will cause thousands of deaths and lead to geopolitical instability, so we need governments to reduce carbon emissions immediately.

Uninterpretable general intelligence: Language models are uninterpretable and it’s important for us to understand what happens inside of them so we can change and stop AI agents as they manipulate financial markets or destroy a government’s cybersecurity.

Human minds vs AI: Human minds won’t be able to compete with the ever-growing intelligence of AI, so we have to create brain-computer interfaces to boost human processing power to match.

Wait a second.

Did we just propose solutions to all three problems as we thought of them?

Yes, we did.

This is the curse of the “thing.”

This curse implicitly ties each problem to a specific “thing” that will supposedly solve it. As you go into each field, you will find that each of the above solutions is relatively popular within it.

Step 2: Realizing the limits of these solutions

Yet, if we consider the viability of each approach:

We’re far past the point of no return on global warming, and atmospheric CO2 concentrations are already too high for us to solve it simply by reducing emissions.

Do we really gain insight from basic interpretability research, or are we working at the wrong layer of abstraction, giving ourselves a false sense of security? And is just understanding agents really the most effective way to stop them within the time frame we have in mind?

Even with BCIs, humans won’t outcompete AI: a 10x faster interface (BCI) to a 1x brain still loses to a 10x intelligence with a 100x faster interface (APIs).
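One way to read the BCI argument is as a toy model in which effective throughput is the product of raw intelligence and interface speed. The multiplicative form is an assumption made here for illustration; the text does not say how the two factors combine.

$$
\text{throughput} = \text{intelligence} \times \text{interface speed}
$$

$$
\underbrace{1 \times 10}_{\text{human + BCI}} = 10, \qquad
\underbrace{10 \times 100}_{\text{AI + APIs}} = 1000, \qquad
\frac{1000}{10} = 100
$$

Under this assumption, the BCI narrows the gap from 1000x (an unaugmented human at $1 \times 1$) to 100x. It does not close it.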

Step 3: Accepting complexity and finding the right solutions

Surprisingly, global problems turn out to be complex! Who would have thought.

The real solutions require us to think back from our terminal goal to why the problems are truly problems:

To maintain a growing standard of life for humans and animals while solving global warming, we need to invent new energy technologies, build more dams, implement urban flood protection and remove human and animal diseases.

Pythia was all about giving researchers model checkpoints to explore what happens to the weights as we train a foundation model. If you look at papers that cited Pythia, literally no one did this. What the heck? [EDIT: Thanks to Daniel Paleka for uncovering a paper that came out with the lead author of Pythia as senior author] And if we don’t even know the difference between pre-trained and fine-tuned models, why is so much interpretability work happening on fine-tuned or toy models? Why isn’t this divide among the very first questions of interpretability, instead of appearing in none of 200 popular research ideas?

With the math from the last section, it looks like human minds won’t be able to compete even with brain-computer interfaces. Then we can safely discard this solution and pursue A) uploading human minds, B) creating aligned AI police agents or C) designing international controls for AI training.

Do the thing.

Your goal, if you want to make real change, is to get to Step 3. Along the way, you might find people stuck at earlier stages. Don’t let what cursed them get to you.

Evangelists, who advocate for the “agreed-upon” solutions. They’re stuck at Step 1.

Doomers, who understand the problem’s difficulty and give up on actually solving the problem. They’re stuck at Step 2.

The message here is simple: don’t get hijacked by obvious solutions; find the true challenges and make a real impact.

Now, go and “just do the thing”!

Confident optimism

“Optimism is the faith that leads to achievement. Nothing can be done without hope and confidence.” - Helen Keller

I believe it’s largely impossible to achieve your full purpose and productivity unless you are optimistic about the future. Failing to visualize a motivating future inhibits your capacity for original thinking and even degrades your mental and physical wellbeing.

This idea may sound odd coming from someone who thinks there’s a ~30% chance that humanity will fail in the transition to a superintelligent economy. However, optimism has a multitude of positive effects:

Expectations of success and failure are often self-fulfilling; if you aren’t optimistic, you’re more likely to fail.

Being creative about the right solutions requires a positive vision of the future.

An optimistic vision is essential to building teams, as it helps your collaborators buy into a shared vision.

Mental and physical health is associated with optimism, and positive visions of the future make you significantly more optimistic.

And at a basic level, you also just become a nicer person to associate with.

What do I mean by confident optimism?

From sports psychology, it seems that the stronger your vision of success, the higher the likelihood you have of actually succeeding. There’s even a whole book dedicated to this idea applied more broadly.

This is evident among top performers in sports, with pre-game visualization of success as a key strategy for people such as Serena Williams and Viktor Axelsen. Why should engineering, impact, and research be any different?

While the visualization of success may differ among individuals, having a clear vision of what the future entails fundamentally brings clarity to the present, as you can see beyond any immediate failures or successes.

Unfortunately, this is not taught in technical education at any level. Instead, we are taught to be detail-oriented, narrowly focused, and skeptical. This approach is not conducive to building an ambitious, broadly scoped, positive vision of the future.

So as knowledge workers, engineers, and leaders, we’ll have to rebuild our ability for optimism and acquire this superpower. While overconfidence is misplaced, confident optimism will never be.

Build a positive future

So, I hope you’ll join me in creating a positive perspective on our shared future and build confident optimism1. It will likely make you more productive, better at seeing the big picture, and improve your well-being.

The Expensive Internet hypothesis

There is a chance that the future internet will be more expensive than today’s, simply due to the growing capabilities of AI agents. Specifically, I expect that we’ll end up with what I’ll term the Expensive Internet—the idea that every mundane digital action will require support from complex control agents because human-level digital crime will become so cheap and commonplace.

The cost of receiving 20 emails won’t be the same in 2030

Receiving an email today

Let’s consider the cost of receiving an email today. Suppose an email with a small file attached is about 225 KB, and it’s stored with two backups.

For cloud storage, we use AWS estimates covering storage of the email for three months, an initial save (PUT request), five views by the user (GET requests), free inbound data transfer, and internal file transfer for the backups. This comes to about $24e-6.

For compute, we estimate the cost of a t3.micro instance running for 350 ms in total (spam filtering, virus scanning, routing, and delivery) at about $10e-7. The energy cost for all of the above comes to about $77e-8 at $0.10 per kWh.

This puts our total for receiving and handling an email today at about $26e-6. That’s roughly 2.6 hundred-thousandths of a dollar (0.0026 cents). I’d say that’s a reasonably priced internet commodity.
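The per-email total is simply the sum of the three components. The sketch below reproduces it. It takes the storage and energy figures directly from the text. It cross-checks the compute figure against an assumed on-demand t3.micro price of $0.0104 per hour. That rate is a us-east-1 Linux list price; AWS prices vary by region and change over time, so treat it as an assumption.

```python
# Per-email handling cost today, using the component estimates from the text (USD).
STORAGE_USD = 24e-6   # 3 months of storage, PUT/GET requests, backup transfer
ENERGY_USD = 77e-8    # energy for all of the above at $0.10 per kWh

# Compute: 350 ms of a t3.micro instance.
# Assumption: $0.0104/hour on-demand price (not stated in the text).
T3_MICRO_USD_PER_HOUR = 0.0104
COMPUTE_SECONDS = 0.350
COMPUTE_USD = T3_MICRO_USD_PER_HOUR * COMPUTE_SECONDS / 3600

per_email = STORAGE_USD + COMPUTE_USD + ENERGY_USD
twenty_emails = 20 * per_email

print(f"compute per email: ${COMPUTE_USD:.2e}")   # $1.01e-06
print(f"total per email:   ${per_email:.2e}")     # $2.58e-05
print(f"20 emails:         ${twenty_emails:.5f}") # $0.00052
```

The compute share comes out at about $1e-6, matching the $10e-7 in the text. Storage dominates the total.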

Receiving an email tomorrow

Now, imagine a world where human-level intelligence is also a commodity. A criminal could spin up hundreds of thousands of AI agents to write convincing, personalized scam emails with very low profit margin requirements and minimal manpower. As a result, we’d have to assume that every email might be a highly competent scam.

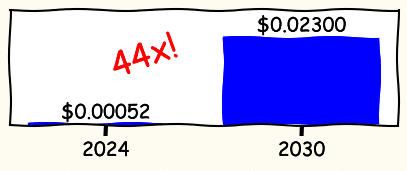

Let’s compare the current cost of receiving my last 20 emails ($0.00052, i.e. 20 × $26e-6) with the cost of receiving them in an AI future.

Of my 20 latest emails, six were from email addresses I haven’t interacted with before (cold outreach). We’ll assume we can handle the 14 familiar emails with our current spam detection methods. However, for the six unfamiliar emails, we might need to process them with a more sophisticated spam detection agent.

Let’s assume that I implement a spam detection agent at API costs with 0% margin. It will check unknown web links for malicious code and look at attached images. In the six emails above, there were twelve links (of which three were from reputable sources, such as docs.google.com) and three images.

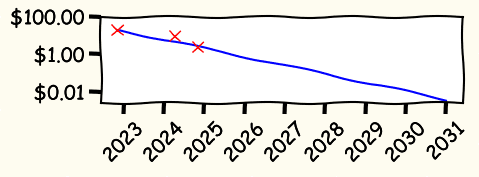

Historically, the price of frontier models has gone from $20 / 1M tokens (Nov 2022) to $60 ($10) / 1M input tokens (Apr 2024) to today’s $2.50 / 1M input tokens. Doing a (very rough) extrapolation of these points to 2030, we may expect a price of around $0.01 / 1M tokens for frontier intelligence.

The price of frontier AI APIs will probably fall drastically during the next eight years
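The rough extrapolation can be reproduced by assuming a constant annual rate of decline between the first and last price points ($20 in Nov 2022 and $2.50 today). This sketch rests on two assumptions not stated in the text:

- "Today" means roughly November 2024.
- The average rate continues unchanged to the start of 2030.

It ignores the intermediate April 2024 point.

```python
# (decimal year, USD per 1M input tokens) taken from the text.
# Assumption: "today" is approximately November 2024.
start_year, start_price = 2022.85, 20.00   # Nov 2022
end_year, end_price = 2024.85, 2.50        # "today"

# Average annual multiplicative change between the two points (log-linear).
annual_factor = (end_price / start_price) ** (1 / (end_year - start_year))


def extrapolate(year: float) -> float:
    """Price per 1M tokens if the same annual rate of decline continues."""
    return end_price * annual_factor ** (year - end_year)


print(f"annual factor: {annual_factor:.3f}")                     # 0.354 (~2.8x cheaper per year)
print(f"2030 estimate: ${extrapolate(2030.0):.3f} / 1M tokens")  # about $0.012
```

Extrapolating to the end of 2030 instead gives roughly $0.005 / 1M tokens. The $0.01 figure is best read as an order of magnitude.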

Let’s use a low estimate of about 25,000 tokens of JavaScript per link and an estimate of 765 tokens per 1024x1024 image1.
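The 765-token figure matches OpenAI's published tile-based rule for high-detail image inputs to GPT-4o-class models at the time of writing:

1. Fit the image within 2048×2048.
2. Scale it so the shortest side is at most 768 px.
3. Charge 170 tokens per 512 px tile, plus a flat 85.

The sketch below implements that rule. The constants are provider-specific and may change, so treat them as assumptions. Not upscaling small images is also an assumption of this sketch.

```python
import math


def image_tokens(width: int, height: int) -> int:
    """Approximate input tokens for one high-detail image.

    Assumes the GPT-4o-style rule: fit within 2048x2048, downscale so the
    shortest side is at most 768 px, then 170 tokens per 512x512 tile plus
    85 base tokens. Images are never upscaled in this sketch.
    """
    # Step 1: fit inside a 2048 x 2048 square.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # Step 2: shortest side at most 768 px.
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # Step 3: count 512 px tiles and price them.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return 85 + 170 * tiles


print(image_tokens(1024, 1024))  # 765  (768x768 -> 2x2 tiles)
print(image_tokens(2048, 4096))  # 1105 (768x1536 -> 2x3 tiles)
```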

Now, to estimate the full price of processing the 20 emails, we take our complete original estimate of $0.00052 and add the input tokens for the nine links from non-reputable sources and the three images:

$$0.00052 + 9 \times 25{,}000 \times 0.1 \times 10^{-6} + 3 \times 765 \times 0.1 \times 10^{-6} \approx 0.023$$

This gives us a total of about $0.023 for all 20 emails.

This is a price increase of approximately 44-fold per email.
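The 20-email estimate and the resulting multiplier can be reproduced directly from the figures in the text. The formula prices tokens at 0.1e-6 USD each, i.e. $0.10 per 1M input tokens. The variable and function names are illustrative.

```python
# Reproduces the 20-email estimate from the text (all values in USD).
BASELINE_20_EMAILS = 0.00052   # 20 emails at about $26e-6 each

LINKS_TOTAL = 12
LINKS_REPUTABLE = 3            # e.g. docs.google.com; skipped by the agent
TOKENS_PER_LINK = 25_000       # low estimate for a page's JavaScript
IMAGES = 3
TOKENS_PER_IMAGE = 765         # one 1024x1024 image

PRICE_PER_TOKEN = 0.1e-6       # $0.10 per 1M input tokens, as in the formula


def cost_20_emails(price_per_token: float) -> float:
    """Baseline handling cost plus agent input tokens for links and images."""
    links_to_check = LINKS_TOTAL - LINKS_REPUTABLE   # 9
    agent_tokens = links_to_check * TOKENS_PER_LINK + IMAGES * TOKENS_PER_IMAGE
    return BASELINE_20_EMAILS + agent_tokens * price_per_token


total = cost_20_emails(PRICE_PER_TOKEN)
print(f"total for 20 emails: ${total:.3f}")              # $0.023
print(f"increase: {total / BASELINE_20_EMAILS:.1f}x")    # 44.7x
```

The multiplier is dominated by the assumed token price. At $0.01 per 1M tokens, `cost_20_emails(0.01e-6)` comes to about $0.0028, roughly a 5-fold increase.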

A new internet

While these are rough estimates, they highlight a potential future where the costs associated with internet use could increase significantly due to the need for a sophisticated AI control layer on all digital interactions. The Expensive Internet makes us think about how we can improve our digital immune system while preserving the benefits of human-level AI.

And of course, it won’t stop at mundane things such as email.